SharePoint 2010 on Windows 10: Solving COMException / Unknown error (0x80005000)

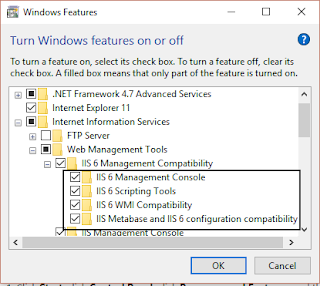

This is not a problem I expected to solve, but it happened anyway. A client is using SharePoint 2010, and I need a local development farm. I started with the usual configuration requirements:

- Added `<Setting Id="AllowWindowsClientInstall" Value="True"/>` to the Setup config
- Created a script to make a new configuration database so that I don't have to join a domain:

```powershell
# Load the SharePoint cmdlets (not needed inside the SharePoint 2010 Management Shell)
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue
# Farm credentials; replace the placeholder account and password with your own
$secpasswd = ConvertTo-SecureString "MyVerySecurePassword" -AsPlainText -Force
$mycreds = New-Object System.Management.Automation.PSCredential ("mydomain\administrator", $secpasswd)
# A unique database name lets the script be re-run without clashing with an earlier attempt
$guid = [guid]::NewGuid(); $database = "spconfig_$guid"
New-SPConfigurationDatabase -DatabaseName $database -DatabaseServer myservername\SharePoint -FarmCredentials $mycreds -Passphrase (ConvertTo-SecureString "MyVerySecurePassword" -AsPlainText -Force)
```

Running this PowerShell script failed with COMException / Unknown error (0x80005000). The solution is simple: you need to enable IIS 6.0 Management Compat...
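For reference, here is a minimal sketch of where the `AllowWindowsClientInstall` setting from the first step goes. It assumes the Setup config is the `config.xml` file under `files\Setup` in the extracted SharePoint 2010 installation files, so check that path against your own media. The `Setting` element sits directly inside the root `Configuration` element, next to the settings that are already there.

```xml
<Configuration>
  <!-- Existing Package, Logging and Setting elements stay unchanged -->
  <Setting Id="AllowWindowsClientInstall" Value="True"/>
</Configuration>
```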